I would like to share my recent experience with a VMware migration that I completed successfully for a well-reputed organization in Dubai, UAE. As this was a confidential project, the customer’s name cannot be shared.

The customer intended to migrate the entire VMware environment to new hardware because the old hardware had reached end-of-sale and end-of-support.

In this article, I will share all the steps I took to complete the migration successfully. The hardware had already been finalized before I was engaged on the project, meaning the design was ready, but the migration strategy and the LLD (Low Level Design) were not. That is where I came into the picture, and these two are the major aspects of completing the project successfully.

Prepare the following before starting the deployment, and review it with the customer several times to understand the existing environment thoroughly and avoid surprises. Believe me, we still always face some issues :-).

- Project Plan

- Scope of Work

- Timelines for each task

- Parties or teams involved in the project

- Buffer time to resolve any issues that occur during the migration

- Migration Strategy/Process

- Monitoring the migration

- LLD (Low Level Design), which includes:

  - Hardware details

  - Migration strategy

  - IP scheme structure and network planning

  - VMware OS details

  - Storage configuration

Project highlights:

- On-premises implementation

- Physical rack-mount servers, SAN storage and SAN/FC switches

- Best practices were followed throughout the deployment.

I am not covering the backup here, although it was also part of the project; I will cover it in another post once I get time :-).

Let’s begin…

Source

VMware vCenter – 1 (6.7.0 build-14368073)

VMware Datacenter – 1

VMware Clusters – 5 (ESXi 6.7.0 build-15160138)

Target

VMware vCenter – 1 (Existing vCenter will be used)

VMware Datacenter – 1 (Existing)

VMware Cluster – 3 (New Clusters – ESXi 6.7.0 build-15160138 for Production, Database and Development).

Basic Implementation Steps

- Performed a detailed compatibility check across the hardware and software.

- Established physical connectivity between the servers, SAN storage and SAN/FC switches.

- Installed the physical servers, upgraded the hardware firmware, and configured RAID for the OS.

- Prepared a customized OS image for the ESXi host servers, including the component drivers (network, HBA, etc.).

- Installed ESXi on the host, assigned the host IP, and configured the root password.

- Repeated the above steps on all the other host servers.

- Created a cluster in the existing vCenter and datacenter and added the respective host servers.

- Configured the SAN storage with LUNs, LUN groups, hosts, host groups and port groups.

- Configured zoning on the SAN/FC switches so the LUNs could be mounted as datastores on the ESXi servers.

- Configured multipathing, networking, NTP, syslog, etc.

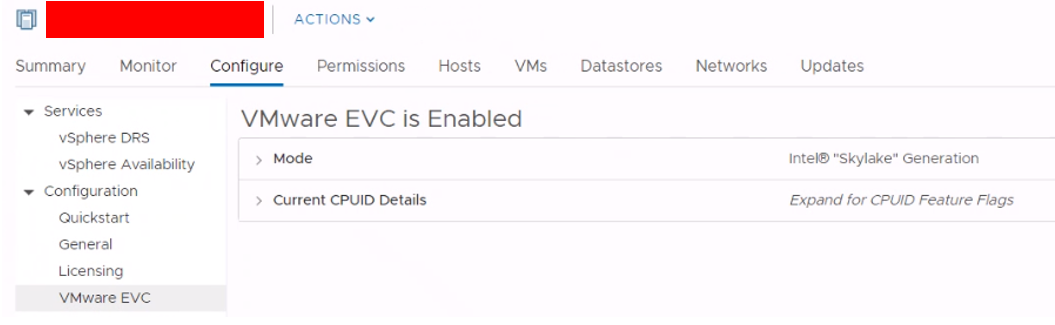

- Enabled EVC on the cluster.
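Assigning the host IP, one of the steps above, can also be scripted from the ESXi Shell. The following is a hedged sketch rather than the exact procedure used on this project: `host_baseline` and the `DRY_RUN` switch are hypothetical helpers, all values are placeholders, and it assumes `vmk0` is the management VMkernel interface created by the installer. Run it from the console (DCUI/ESXi Shell), because changing the vmk0 address drops an SSH session.

```sh
#!/bin/sh
# Hypothetical helper: print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: host_baseline <hostname> <domain> <mgmt-ip> <netmask> <gateway>
# Assumes vmk0 is the management VMkernel interface (installer default).
host_baseline() {
  if [ "$#" -ne 5 ]; then
    echo "usage: host_baseline <hostname> <domain> <ip> <netmask> <gateway>" >&2
    return 1
  fi
  run esxcli system hostname set --host="$1" --domain="$2"
  # Static management IP on vmk0.
  run esxcli network ip interface ipv4 set --interface-name=vmk0 \
    --ipv4="$3" --netmask="$4" --type=static
  # Default gateway for the management network.
  run esxcli network ip route ipv4 add --network=default --gateway="$5"
}
```

Setting `DRY_RUN=1` first and then calling, for example, `host_baseline esx01 example.local 10.10.10.11 255.255.255.0 10.10.10.1` prints the three commands for review before anything is changed.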

Redundancy and high availability were very important criteria for the project and included:

- Network Redundancy for Host Servers

- Dual HBA cards in the host servers for datastore connectivity

- Dual Storage Controllers

- Dual SAN/FC Switches

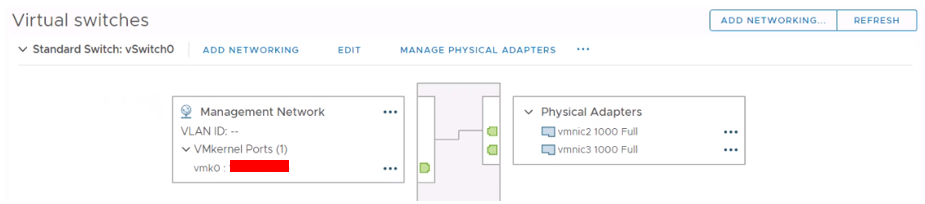

Network Configuration on the Host Servers.

- Dual network ports for ESXi host management

- 4 x 1GE for vMotion traffic

- 2 x 10GE for service VLANs (virtual machines), with their port groups

All the ports were configured as active-active using the VMware “Route based on IP hash” load-balancing policy. Below is an example for ESXi host management.

Refer to the VMware link for more details:
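The teaming policy is normally set in the vSphere Client, but the same change can be sketched with `esxcli` on a standard vSwitch. This is an illustrative example, not the configuration from this project: `vSwitch0`, `vmnic0`/`vmnic1`, `set_iphash` and `DRY_RUN` are placeholders. “Route based on IP hash” only works when the physical switch ports are configured as a static EtherChannel (a standard vSwitch does not support LACP), so coordinate the change with the network team.

```sh
#!/bin/sh
# Hypothetical helper: print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: set_iphash <vswitch> <uplink1,uplink2,...>
# All listed uplinks become active; IP hash expects no standby uplinks.
set_iphash() {
  if [ "$#" -ne 2 ]; then
    echo "usage: set_iphash <vswitch> <uplink1,uplink2,...>" >&2
    return 1
  fi
  run esxcli network vswitch standard policy failover set \
    --vswitch-name="$1" --load-balancing=iphash --active-uplinks="$2"
  # Print the resulting policy for comparison with the LLD.
  run esxcli network vswitch standard policy failover get --vswitch-name="$1"
}
```

Port groups that override the vSwitch teaming policy need the same change through `esxcli network vswitch standard portgroup policy failover set`.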

Datastore configuration on the Host Servers.

Multipathing was configured on all the host servers using the Round Robin path selection policy.

To configure multipathing, follow the storage vendor’s documentation.

Note: If multipathing is configured after the datastores are mounted, you might have to restart the host server for the change to take effect.
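Below is a hedged sketch of setting Round Robin with `esxcli`, assuming the array is claimed by `VMW_SATP_ALUA`; the correct SATP, and any additional tuning, must come from the storage vendor’s documentation mentioned above. `set_default_rr`, `set_device_rr` and `DRY_RUN` are hypothetical helpers. It also shows why the note above applies: a new SATP default only affects devices claimed afterwards, so already-mounted LUNs keep their old policy until a reboot unless they are switched per device.

```sh
#!/bin/sh
# Hypothetical helper: print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# New default PSP for an SATP. Applies to devices claimed after the change,
# so LUNs that are already mounted keep their current policy until reclaimed
# (for example by a reboot). VMW_SATP_ALUA is only an assumed default.
set_default_rr() {
  run esxcli storage nmp satp set --satp="${1:-VMW_SATP_ALUA}" --default-psp=VMW_PSP_RR
}

# Switch already-claimed devices right away; pass one naa device ID per argument.
set_device_rr() {
  if [ "$#" -eq 0 ]; then
    echo "usage: set_device_rr <device-id>..." >&2
    return 1
  fi
  for dev in "$@"; do
    run esxcli storage nmp device set --device="$dev" --psp=VMW_PSP_RR
  done
}
```

Device IDs (the `naa.` identifiers) and their current Path Selection Policy are listed by `esxcli storage nmp device list`, which is also a quick way to verify the result.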

NTP Server Configuration on the ESXi Host Servers.

The domain controller IPs were configured as the NTP servers.

Syslog Server Configuration

Select the host, go to Configure, then Advanced System Settings, click Edit, filter for syslog, and enter the syslog server IP in Syslog.global.logHost.

Wait, assigning the syslog server IP is not enough; you also need to execute the commands below on the host server over SSH.

Opening the syslog ports on the host firewall

~ # esxcli network firewall ruleset set -r syslog -e true

Reloading the syslog daemon

~ # esxcli system syslog reload
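The GUI setting and the two commands above can be combined into a single shell sketch. It assumes a UDP syslog server; `udp://10.0.0.50:514`, `configure_syslog` and `DRY_RUN` are placeholders, not values from this project. `esxcli system syslog config set --loghost` writes the same `Syslog.global.logHost` value as the Advanced System Settings dialog.

```sh
#!/bin/sh
# Hypothetical helper: print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: configure_syslog <proto://host:port>
configure_syslog() {
  if [ -z "${1:-}" ]; then
    echo "usage: configure_syslog <proto://host:port>" >&2
    return 1
  fi
  # Same setting as Syslog.global.logHost in Advanced System Settings.
  run esxcli system syslog config set --loghost="$1"
  # Open the outbound syslog ports on the host firewall and apply the rules.
  run esxcli network firewall ruleset set --ruleset-id=syslog --enabled=true
  run esxcli network firewall refresh
  # Make the syslog daemon pick up the new configuration.
  run esxcli system syslog reload
}
```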

Virtual Machines Migration

- Before the migration, we verified that all the VLANs required by the virtual machines were configured as port groups on the 10GE ports and on the data network switch as well. Otherwise, the VMs would be disconnected from the network after migration.

- Migrated test VMs to the respective clusters first for testing (tested successfully).

- Migrated the production VMs in batches.

- Upgraded VMware Tools on all the VMs.

- Edited the settings of every VM and set the CD/DVD drive to Client Device.

- Removed any existing snapshots before the migration.
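The VLAN check in the first step lends itself to a small script. This sketch is an assumption, not the method used on the project: it expects a text file with one required VLAN ID per line (for example exported from the source clusters), reads the output of `esxcli network vswitch standard portgroup list` from a target host on stdin, and prints every required VLAN that no port group carries. `missing_vlans` is a hypothetical name.

```sh
#!/bin/sh
# Usage: esxcli network vswitch standard portgroup list | missing_vlans <required-vlans-file>
# Prints each VLAN ID from the file that no standard port group on this host carries.
missing_vlans() {
  if [ ! -r "${1:-}" ]; then
    echo "usage: missing_vlans <file with one VLAN ID per line>" >&2
    return 1
  fi
  # VLAN ID is the last column; header and dashes lines are non-numeric and skipped.
  present="$(awk '$NF ~ /^[0-9]+$/ { print $NF }' | sort -u)"
  sort -u "$1" | while read -r vlan; do
    [ -n "$vlan" ] || continue
    printf '%s\n' "$present" | grep -qx -e "$vlan" || echo "$vlan"
  done
}
```

On a target host this would be run as `esxcli network vswitch standard portgroup list | missing_vlans /tmp/required-vlans.txt` (the path is a placeholder); an empty result means every required VLAN has a port group. It only looks at standard vSwitches and does not treat VLAN 4095 (trunk) specially, and the physical data switch still has to be checked separately.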

During the migration, we enabled vMotion on the 10GE ports to speed up the process, and afterwards reverted it back to the 4 x 1GE vMotion ports.
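Temporarily moving vMotion to the 10GE ports, as described above, amounts to tagging a VMkernel interface for vMotion and removing the tag afterwards. A hedged sketch: `vmk3` stands for a hypothetical VMkernel port on the 10GE uplinks, which needs an IP address reachable from the vMotion interfaces of the other hosts; `vmotion_on`, `vmotion_off` and `DRY_RUN` are placeholders.

```sh
#!/bin/sh
# Hypothetical helper: print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: vmotion_on <vmk>   enable the vMotion service tag on a VMkernel port
vmotion_on() {
  [ -n "${1:-}" ] || { echo "usage: vmotion_on <vmk>" >&2; return 1; }
  run esxcli network ip interface tag add --interface-name="$1" --tagname=VMotion
}

# Usage: vmotion_off <vmk>  remove the vMotion service tag again after migration
vmotion_off() {
  [ -n "${1:-}" ] || { echo "usage: vmotion_off <vmk>" >&2; return 1; }
  run esxcli network ip interface tag remove --interface-name="$1" --tagname=VMotion
}
```

`esxcli network ip interface tag get --interface-name=vmk3` shows the tags currently applied, which is a quick way to confirm the revert.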

Issue:

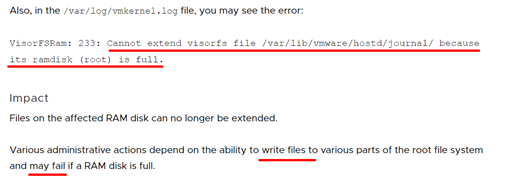

After the migration, we noticed that random VMs got stuck or hung and required a forced restart.

Root Cause:

The host’s root ramdisk had run out of space, as shown by this warning in the host logs:

2021-06-30T10:20:17.499Z cpu63:2306107)WARNING: VisorFSRam: 206: Cannot extend visorfs file /VMNAME.tgz because its ramdisk (root) is full.
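To find other hosts showing the same symptom before their VMs hang, the warning can be counted per ramdisk. A minimal sketch, assuming the messages land in `/var/log/vmkernel.log` and use the same wording as the line above; `ramdisk_full_counts` is a hypothetical helper. Current ramdisk usage can also be checked on the host with `vdf -h` or `esxcli system visorfs ramdisk list`.

```sh
#!/bin/sh
# Count "ramdisk (<name>) is full" warnings per ramdisk in an ESXi log.
# The default path is an assumption: /var/log/vmkernel.log.
ramdisk_full_counts() {
  log="${1:-/var/log/vmkernel.log}"
  # Keep only VisorFSRam lines, extract the ramdisk name, then count per name.
  grep 'VisorFSRam' "$log" \
    | sed -n 's/.*its ramdisk (\([^)]*\)) is full.*/\1/p' \
    | sort | uniq -c
}
```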

Resolution:

- Free up space in the root ramdisk of the affected hosts by following the steps in the VMware KB article below.

https://kb.vmware.com/s/article/2001550?lang=en_US

OR

- Identify the affected VMs and their cluster, then restart all the host servers in that cluster one by one:

  - Migrate the VMs to another host server.

  - Put the host in maintenance mode.

  - Restart the host server.

  - Move the VMs back.
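The per-host part of the procedure above (maintenance mode, then restart) can be run from each host’s shell once its VMs have been moved off. This is a hedged sketch with hypothetical `restart_host` and `DRY_RUN` helpers. Entering maintenance mode from the host itself does not vMotion the VMs for you, so it is not a substitute for the migration step, and `esxcli system shutdown reboot` expects the host to already be in maintenance mode.

```sh
#!/bin/sh
# Hypothetical helper: print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: restart_host [reason]
# Assumes the host's VMs were already migrated to another host.
restart_host() {
  reason="${1:-Clear full root ramdisk}"
  run esxcli system maintenanceMode set --enable=true || return 1
  # Should report "Enabled" before the reboot is issued.
  run esxcli system maintenanceMode get
  run esxcli system shutdown reboot --reason="$reason"
}

# After the host is back and healthy, exit maintenance mode before moving VMs back:
#   esxcli system maintenanceMode set --enable=false
```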

Finally, the issue was resolved and the project was closed successfully.

Very helpful and detailed article.

Thanks as always, Ammar.

You’re welcome!